Testing Your Implementation on Ad Networks

Testing ad integrations is quite a tedious process. Since we work with third-party SDKs that can hardly be controlled, it can be challenging to automate the testing process. Nevertheless, to reduce testing time, we have developed and implemented a debug panel, which we will discuss in this article.

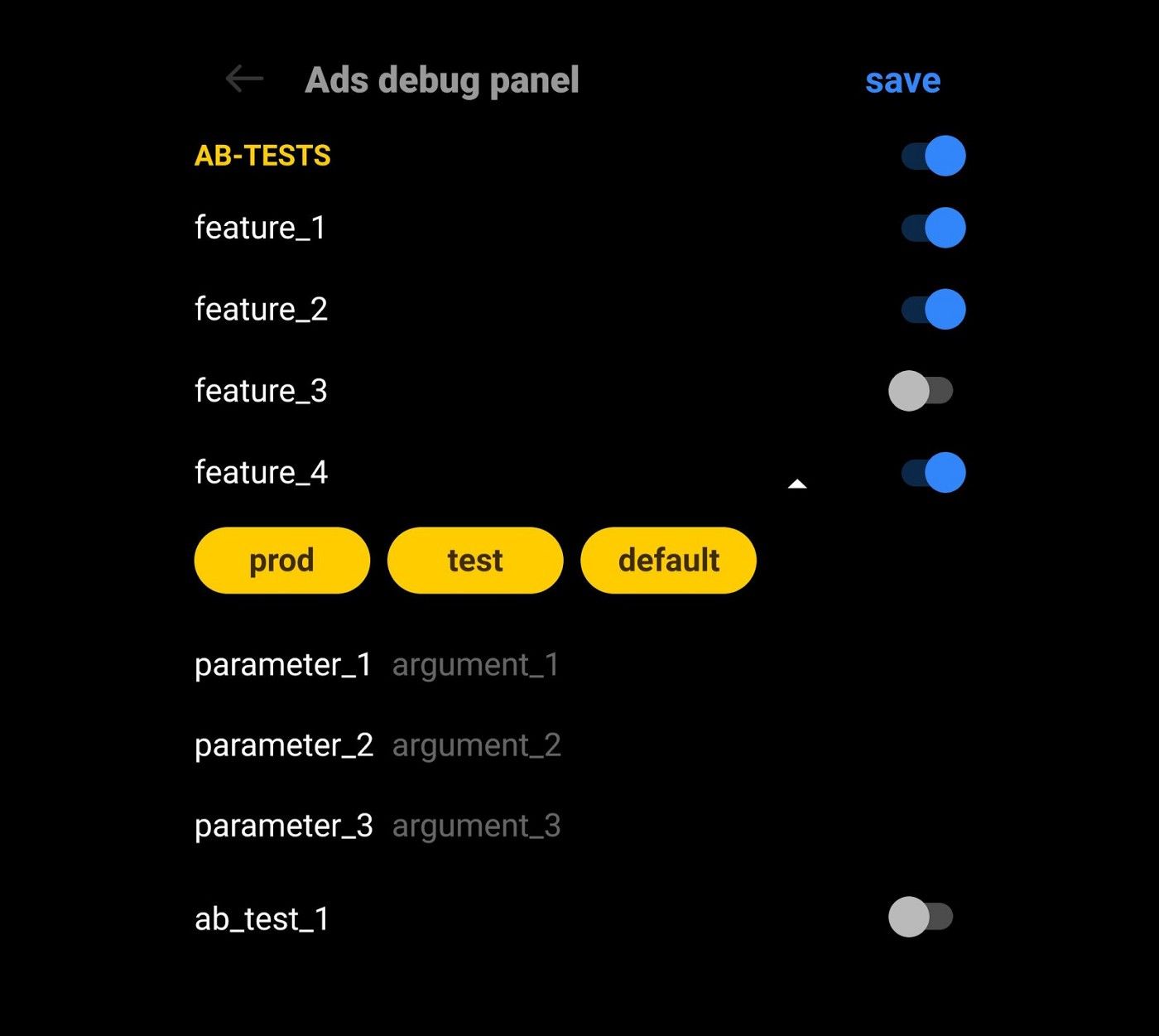

Previewing and configuring debug panels

All FunCorp features and experiments are released using the feature toggle mechanism. Feature toggles are flags that enable or disable specific sections of code, which makes releases more stable and secure. The product, platform, and monetization teams at FunCorp all work with these feature toggles because the ability to change feature and experiment configurations without releasing a new version of the app affects its safety, fault tolerance, and, ultimately, the company’s revenue.

How Ads Are Received

FunCorp uses two models for receiving ads: waterfall and bidding. Here, we will discuss the waterfall model.

The app first requests the mediator’s configuration and, after receiving it, proceeds to a specific ad network to display a banner or native ad. If the response is positive, the ad network attempts to load the suggested content. At the end of the iteration, a message with the result is sent to the mediator. If any of these stages fails, the entire procedure is repeated. As a result, hundreds or even thousands of requests may be sent to download a single banner or native ad.
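
The loop described above can be sketched roughly as follows. This is a minimal illustration rather than FunCorp's implementation: the function `request_ad`, its callbacks, and the iteration limit are all hypothetical names chosen for the example.

```python
from typing import Callable, Dict, Optional, Tuple

# Hypothetical type: a loader returns the ad content, or None when the
# network has no fill or the download fails.
Loader = Callable[[], Optional[str]]


def request_ad(
    get_config: Callable[[], Optional[str]],
    loaders: Dict[str, Loader],
    report: Callable[[str, bool], None],
    max_iterations: int = 1000,
) -> Tuple[Optional[str], int]:
    """Repeat the whole procedure until one ad loads or the budget runs out.

    Returns the loaded content (or None) and the number of iterations used.
    """
    for iteration in range(1, max_iterations + 1):
        # Stage 1: ask the mediator which ad network to try next.
        network = get_config()
        if network is None or network not in loaders:
            continue  # configuration stage failed: start over
        # Stage 2: ask that network for the banner or native ad.
        content = loaders[network]()
        # Stage 3: tell the mediator how this iteration ended.
        report(network, content is not None)
        if content is not None:
            return content, iteration
    return None, max_iterations
```

Calling `request_ad` with a mediator callback that names one network per call, and loaders that fail for the first few networks, shows how the request count grows with every failed iteration.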

Given that iFunny integrates about ten advertising SDKs and ad behavior under production parameters is unpredictable, testing with production ads is impractical. This is why 99% of all tasks involve test ads.

Test Ads

Ad networks provide test ads. The critical advantage of test ads is that they always come with the same set of parameters and characteristics. Using test ads, you can be sure that a new piece of functionality or a bug fix does not interfere with any of the basic parameters or the general operation of the ad content. Technically, getting test ads is probably even more difficult than getting production ads. The waterfall stages are the same as for production ads, so we still have to work with traffic, mediators, features, test modes, and VPNs.

Manually

Obtaining test ads “manually” is even more difficult. For this purpose, we need a traffic-handling tool. FunCorp uses Charles. When using Charles, you must replace production parameters with test values in the following requests:

- To your configuration with features and experiments
- To the mediator (specifically for banner and native ads)
- To the ad network (specifically for banner and native ads)

In some cases, you may need test modes (parameters that tell the ad network to return test ads) as well as a placement modification (a parameter that requests a specific ad type, such as video, from the ad network). In addition, some ad networks require a VPN.
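
To make the idea of a substitution concrete, here is a small sketch of what replacing request parameters amounts to. It is not how Charles works internally; the function name, the URL, and the parameter names `placement` and `test_mode` are assumptions made up for the illustration.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def substitute_params(url: str, overrides: dict) -> str:
    """Return the URL with query parameters replaced or added from overrides."""
    parts = urlsplit(url)
    # Keep blank values so untouched parameters survive the round trip.
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    params.update(overrides)
    return urlunsplit(parts._replace(query=urlencode(params)))


# Hypothetical ad request: swap the production placement for a test one
# and add a test-mode flag.
test_url = substitute_params(
    "https://ads.example.com/request?placement=prod_banner&app=1",
    {"placement": "test_video", "test_mode": "1"},
)
```

Every request in the list above needs a rule of this kind, which is why manual substitution becomes laborious.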

As you can see, you have to modify the traffic quite actively, which can result in the following undesirable effects:

Changes to the mediator’s admin panel that do not take effect immediately

- To get an ad from a specific ad network, you need to select the ad network in the mediator’s admin panel. Such changes take effect slowly because configurations are cached. The wait can be 15–20 minutes.

Substitutions expire

- The production ad parameters change at specific intervals

Some ad networks cannot be sniffed

- If you route the traffic through a proxy, some ad networks do not work at all

Situations that require testing without using a proxy

- At times, you need to rule out the effect of a sniffer on ad operation

Situations when the test mode cannot be substituted

- Some networks have a hardcoded test mode, or it is transmitted in such a format that makes it difficult to make a substitution or requires a specific build for testing.

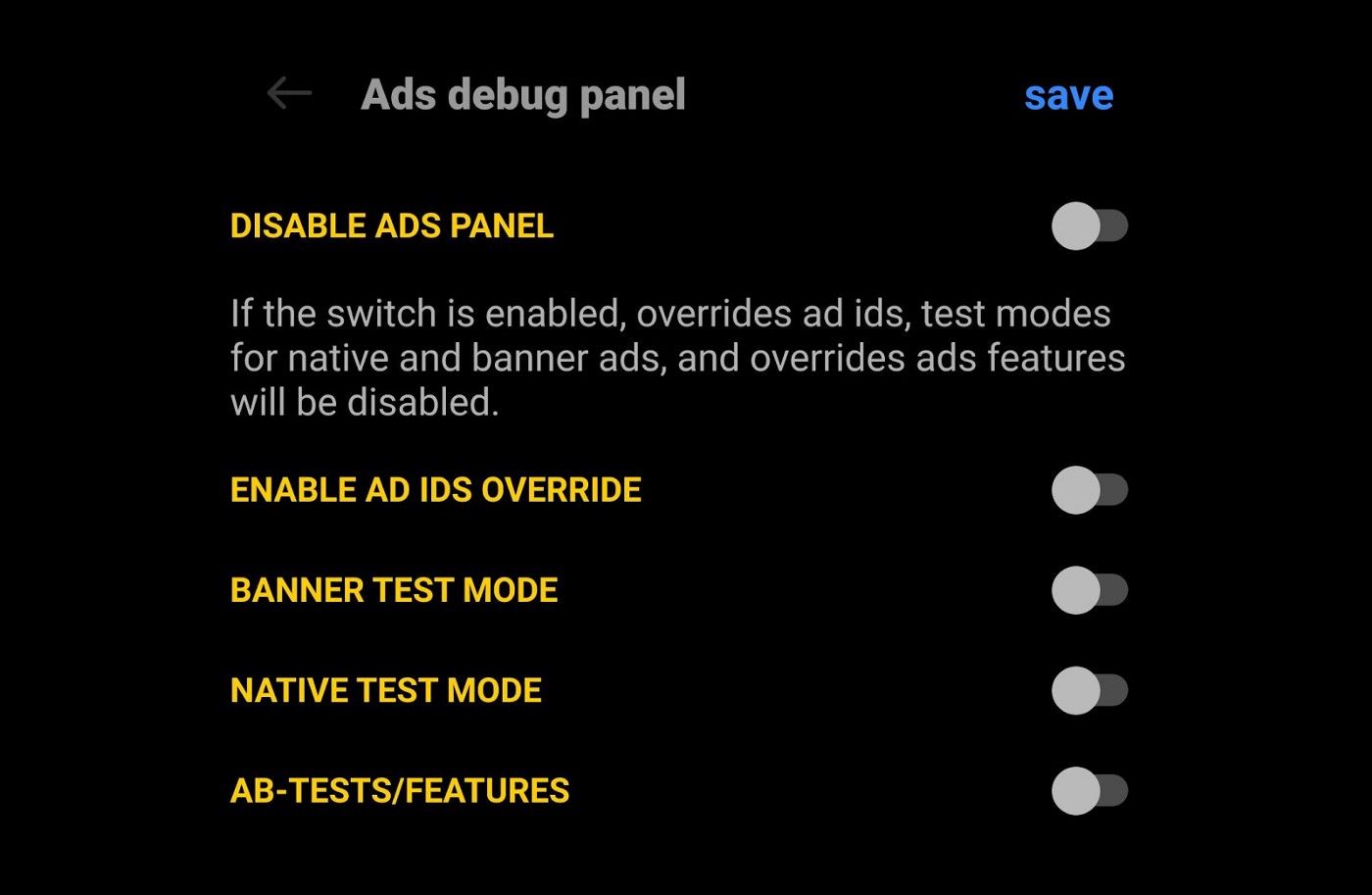

Debug panel

To make testing easier and reduce manual effort, FunCorp has developed an ad debug panel. This is a separate interface that lets you configure specific parameters for a client and replaces most of the parameter substitutions and configurations mentioned above. Let’s take a look at the debug panel itself and its pros and cons.

Stability

As with any test environment, the debug panel may be unstable, and we have made provisions for this risk. When necessary, the debug panel and its functionality can be disabled manually or replaced with parameter substitutions in Charles.

Enabling debug panel (DISABLE ADS PANEL)

Time input

Undoubtedly, developing and supporting the debug panel takes time. However, debug panel updates are fairly rare, and the reduction in manual testing and releases makes up for the time spent.

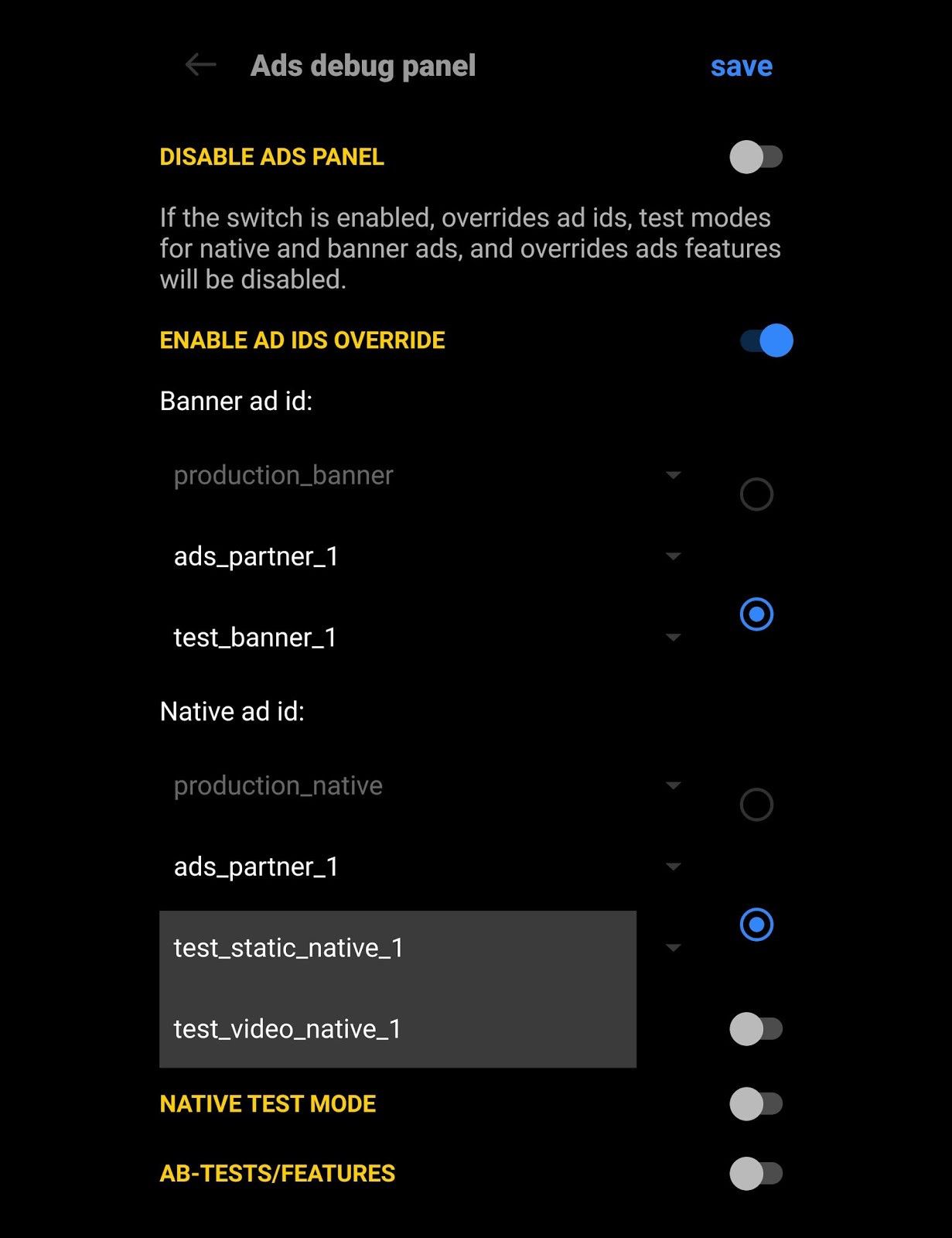

For example, the debug panel allows you to select either the production banner and native ad or a test creative from a specific ad network (including placement). All external changes are made beforehand, so no additional changes are required. This also solves the problem of cached settings in the mediator’s admin panel.
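
One way to picture this selection is a lookup of pre-registered test ad units keyed by network and placement. The sketch below is an assumption about how such an override could be modeled, not the panel's actual code; the network names, unit IDs, and the `select_ad_unit` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class AdUnit:
    network: str
    placement: str
    unit_id: str


# Hypothetical identifiers, prepared in advance so that no change in the
# mediator's admin panel is needed at test time.
PRODUCTION_UNIT = AdUnit("mediator", "banner", "prod-banner-id")
TEST_UNITS: Dict[Tuple[str, str], AdUnit] = {
    ("network_a", "banner"): AdUnit("network_a", "banner", "test-a-banner"),
    ("network_a", "native"): AdUnit("network_a", "native", "test-a-native"),
}


def select_ad_unit(
    override_enabled: bool,
    network: Optional[str] = None,
    placement: str = "banner",
) -> AdUnit:
    """Return the production unit, or the chosen test unit when overriding."""
    if not override_enabled:
        return PRODUCTION_UNIT
    try:
        return TEST_UNITS[(network, placement)]
    except KeyError:
        # Fail loudly: silently serving production ads would hide a
        # misconfigured test.
        raise ValueError(f"no test ad unit for {network!r}/{placement!r}")
```

Raising an error for an unknown combination is a design choice of this sketch: a tester who asked for a test creative should never receive a production ad by accident.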

Enabling test ads (ENABLE AD IDS OVERRIDE)

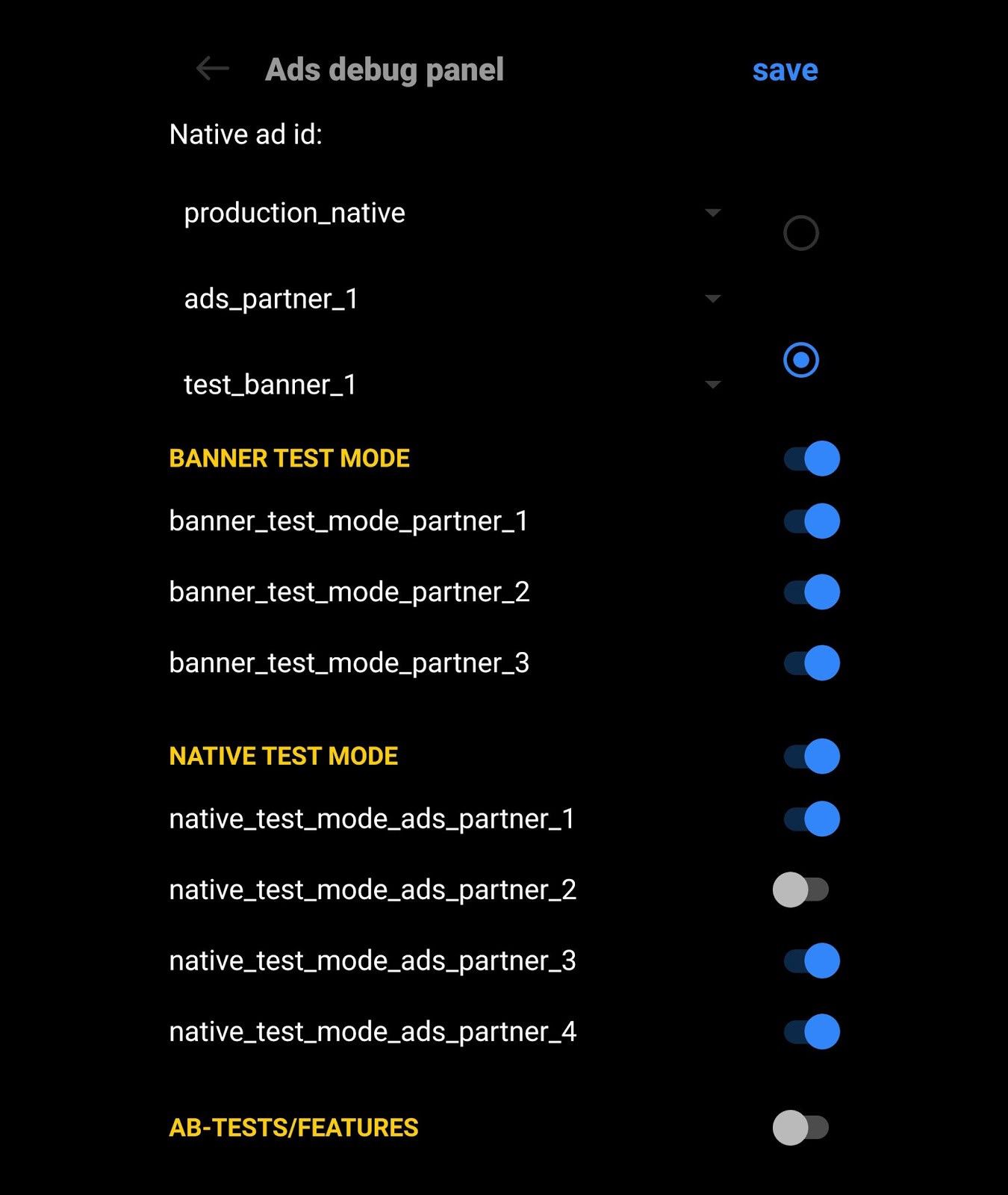

You can also enable the test mode in the debug panel and save time on assembling a new build.

Banner and native ad test mode (BANNER TEST MODE & NATIVE TEST MODE)

Safety

From this perspective, there are no disadvantages. When using the debug panel, you do not have to change the parameters of features and experiments in production since all changes will be made locally. Aside from enabling/disabling features/experiments before production, the debug panel also allows for selecting production/test/default parameters or changing them manually.
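
The choice between production, test, default, and manually entered parameters can be thought of as a local lookup that never touches the remote configuration. The following sketch assumes a simple data model; the `Source` enum, the `resolve_feature` function, the feature names, and the fallback to default values are illustrative assumptions, not a description of the actual panel.

```python
from enum import Enum
from typing import Any, Dict


class Source(Enum):
    PRODUCTION = "production"
    TEST = "test"
    DEFAULT = "default"
    MANUAL = "manual"


def resolve_feature(
    name: str,
    source: Source,
    values: Dict[Source, Dict[str, Any]],
) -> Any:
    """Pick a feature's value from the locally selected source.

    Falls back to the default value when the selected source does not
    define the feature (an assumption of this sketch).
    """
    chosen = values.get(source, {})
    if name in chosen:
        return chosen[name]
    return values.get(Source.DEFAULT, {}).get(name)


# Hypothetical feature values held on the device only.
local_values = {
    Source.PRODUCTION: {"banner_enabled": True},
    Source.TEST: {"banner_enabled": False},
    Source.DEFAULT: {"banner_enabled": False, "native_enabled": True},
    Source.MANUAL: {"banner_refresh_seconds": 5},
}
```

Because the lookup reads only local data, switching the source for one tester has no effect on what other users receive.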

Experiments/features (AB-TESTS/FEATURES)

Summary

Let us sum up the project. Of course, developing and supporting the debug panel takes time. However, we estimate that our debug panel has reduced regression testing time by 50%. So don’t be afraid to develop your own solutions if they can potentially help optimize your resources.

Medium.com
